#AI governance

#ai-governance #ai-safety #autonomous-weapons #government-regulation #ai-ethics #anthropic #risk-management

DevOps

from The Hacker News

1 day ago: New RFP Template for AI Usage Control and AI Governance

Organizations have AI security budgets but lack clear requirements for AI governance solutions, requiring a structured evaluation framework focused on interaction-level control rather than application cataloging.

Artificial intelligence

from www.theguardian.com

2 days ago: OpenAI amends Pentagon deal as Sam Altman admits it looks 'sloppy'

OpenAI is revising its Pentagon AI contract after CEO Sam Altman acknowledged the rushed deal appeared opportunistic, adding explicit restrictions against domestic mass surveillance and NSA deployment.

Artificial intelligence

from www.theguardian.com

2 days ago: Trump is using AI to fight his wars - this is a dangerous turning point | Chris Stokel-Walker

AI militarization poses significant risks, with recent reports showing AI tools used in military operations affecting regime change and causing casualties in Venezuela and Iran.

from Computerworld

6 days ago: AI doesn't think like a human. Stop talking to it as if it does

Autonomous agents take the first part of their names very seriously and don't necessarily do what their humans tell them to do - or not to do. But the situation is more complicated than that. Generative (genAI) and agentic systems operate quite differently than other systems - including older AI systems - and humans. That means that how tech users and decision-makers phrase instructions, and where those instructions are placed, can make a major difference in outcomes.

Artificial intelligence

fromComputerWeekly.com

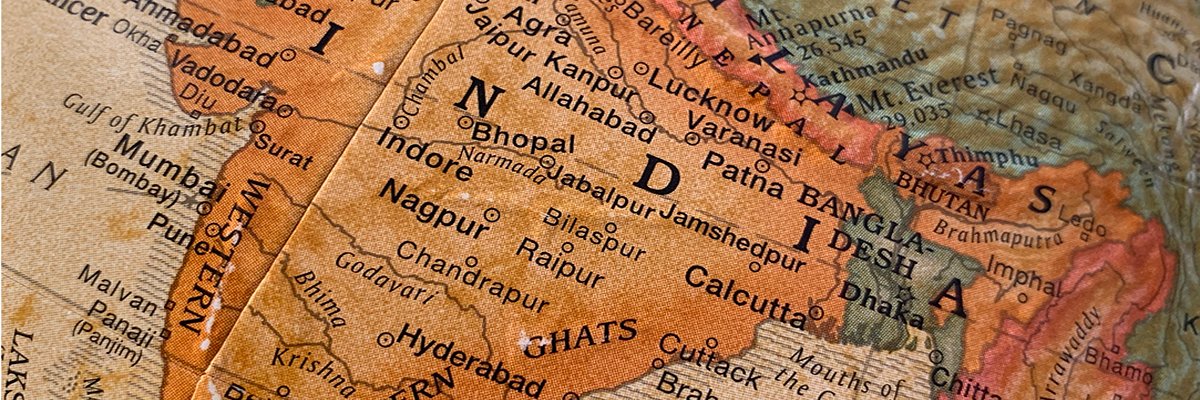

6 days agoIndia AI Impact Summit: Open source gains ground, but sovereignty tensions persist | Computer Weekly

Open source AI development gained political prominence at the India AI Impact Summit, shifting focus from safety risks toward inclusive economic development in the Global South through multilateral cooperation.

Artificial intelligence

from Fortune

1 week ago: Former IRS Commissioner: Here's how we used AI to create immediate value when taxpayers scrutinized every dollar | Fortune

Companies must identify urgent pain points, apply AI practically, measure impact, and pursue productivity, sensitive-process, and purpose-built AI to translate investment into measurable returns.

from ComputerWeekly.com

1 week ago: UK AI alignment project gets OpenAI and Microsoft boost | Computer Weekly

During the AI Impact Summit in India, the UK government announced that £27m is now available for AI alignment research, backing some 60 projects. The project combines grant funding for research, access to compute infrastructure and ongoing academic mentorship from AISI's own leading scientists in the field to drive progress in alignment research. Without continued progress in this area, increasingly powerful AI models could act in ways that are difficult to anticipate or control, which could pose challenges for global safety and governance.

Artificial intelligence

from TechCrunch

1 week ago: Reload wants to give your AI agents a shared memory | TechCrunch

Reload is a platform that lets organizations manage their AI agents across teams and departments. Companies can connect agents, regardless of who built them (whether by a third party or internally), assign them roles and permissions, and track the work they perform. "Reload acts like the system of record for AI employees, providing visibility, coordination, and oversight as agents operate across functions," said Asare, the company's CEO.

Artificial intelligence

from Business Insider

2 weeks ago: The biggest names in AI are gathering for a summit in India. Here are 5 of the biggest takeaways.

It's gonna be something like 10 times the impact of the Industrial Revolution, but happening at 10 times the speed, probably unfolding in a matter of a decade rather than a century.

Artificial intelligence

from Computerworld

2 weeks ago: Apple study shows why we want to control AI

On a personal basis, that means people using AI services want to be able to veto big decisions such as making payments, accessing or using contact details, changing account details, placing orders, or even just seeking clarity during a decision-making process. Extend this way of thinking to the working environment and the resistance is likely to be equally strong in professional settings.

Artificial intelligence

from Axios

3 weeks ago: OpenAI and Anthropic are picking public fights

Anthropic pledged to keep its large language model, Claude, ad-free, alongside a commercial poking at OpenAI, which is testing ads in ChatGPT. OpenAI CEO Sam Altman fired back with a 420-word post on X, calling the ad "dishonest." Altman was already fending off rumors about OpenAI's relationship with Nvidia, after the Wall Street Journal reported the chipmaker was pulling back from a proposed $100 billion investment. Reuters' sources said OpenAI has been exploring alternatives to Nvidia's chips.

Artificial intelligence

from InfoQ

3 weeks ago: Next Moca Releases Agent Definition Language as an Open Source Specification

Moca has open-sourced Agent Definition Language (ADL), a vendor-neutral specification intended to standardize how AI agents are defined, reviewed, and governed across frameworks and platforms. The project is released under the Apache 2.0 license and is positioned as a missing "definition layer" for AI agents, comparable to the role OpenAPI plays for APIs. ADL provides a declarative format for defining AI agents, including their identity, role, language model setup, tools, permissions, RAG data access, dependencies, and governance metadata like ownership and version history.

Artificial intelligence

California

from www.theiplawblog.com

3 weeks ago: The Briefing: Part One: CCPA's New Rules on Automated Decision-Making Technology (ADMT)

California's amended CCPA regulations now regulate Automated Decision-Making Technology, extending oversight from data collection to businesses' use of algorithms and AI in decisions.

from The Verge

4 weeks ago: Sam Altman responds to Anthropic's 'funny' Super Bowl ads

But I wonder why Anthropic would go for something so clearly dishonest. Our most important principle for ads says that we won't do exactly this; we would obviously never run ads in the way Anthropic depicts them. We are not stupid and we know our users would reject that. I guess it's on brand for Anthropic doublespeak to use a deceptive ad to critique theoretical deceptive ads that aren't real, but a Super Bowl ad is not where I would expect it.

Artificial intelligence

from ZDNET

1 month ago: Forget the chief AI officer - why your business needs this 'magician'

Chief AI Officers are increasingly appointed, but organizations debate whether CAIOs, CIOs, CDOs, or distributed leadership best ensure effective AI adoption, governance, and productivity.

from ComputerWeekly.com

1 month ago: Skills key to successful AI adoption, says IBM | Computer Weekly

AI is no longer just a tool for efficiency; it's becoming a growth engine for the enterprise. With UK AI investment set to increase significantly in the next four years, success will hinge on integrating AI into core business strategies and reskilling the workforce. Organisations that act decisively, with the appropriate governance and controls in place for AI, will be the ones defining competitive advantage tomorrow.

Artificial intelligence

from Futurism

1 month ago: Anthropic CEO Warns That the AI Tech He's Creating Could Ravage Human Civilization

AI industry leverages fear to secure investment while AI poses existential risks including job loss, concentration of power, sexualization harms, bioweapons, and potential global tyranny.
